A step-by-step guide to an easy and controllable AI effect

As we touched on last week, from where I stand AI is only feasibly useful if you’re able to direct it.

Two of the most important things that any director needs to control are performance, and camera movement/frame composition. AI absolutely stinks at this.

Human/animal performance is floaty and warpy, and totally devoid of nuance. More and more AI models are adding in ways to “control” the camera (you can choose the kind of movement that it does), which only sometimes works, and even then it’s limited control. Real camera work involves reaction to a performance.

One huge advantage of video-to-video is that you can control both the performance and the camera. Here’s a test using one of my children’s toys in my left hand, and my camera in my right hand. As basic as it is, there are a couple of beats that I really wanted to direct:

DIRECTING CREATURE PERFORMANCE

I gave the dragon’s roar anticipation. This is a key animation concept, where you perform the opposite of an action to give the action itself more power – like winding up a body to throw a punch, rather than just throwing an arm out. In this case, the silicone puppet allowed me to scrunch up the dragon’s face before it explodes into a roar. This is cartoony of course – he’d break every bone in his face doing it – but you’d be surprised how often we break bones even in photorealistic animation to add punch to a performance.

Video-to-video is the next step, since then you can at least control the main beats of movement. This allows for much more directability. Of course, you still have the problem of AI completely replacing everything you’ve done rather than selectively changing parts!

DIRECTING CAMERA PERFORMANCE

The camera had to lag behind the performance to show that the roar was so fast and unexpected that it caught the cameraman off guard. This is the kind of thing that you see all the time with real camera work. An angry, imposing figure will “push” the camera back (the cameraman will move to accommodate them). A scared figure will be chased.

It adds up to a HUGE part of what makes a shot work – whereas if you leave AI to figure out the camera, it tends to wander about aimlessly, like a drunk trying to find their way home. Which might be fine, if you were filming a drunk trying to find their way home. It’s fairly limited outside of that, though.

THE DIRECTED RESULT

I think that both those “directed” beats really help the end result. Without them, we would probably just be getting another wafty, floaty performance from both the character and the camera.

But of course, I’ve sacrificed a lot to be able to control the performance and camera. I mean…my AI dragon only develops a jaw when he roars. He’s not close to photoreal (AI struggles with photoreal fantasy creatures, since it hasn’t been trained on enough of them. There’s not enough to steal, in other words!). His background is great, but was forced on me – I didn’t ask for it.

These are just three of the problems in a list of about… 200 that you can find if you analyse the footage!

CONCLUSION

AI-generated footage is absolutely riddled with issues. This will improve, but no one really knows how much.

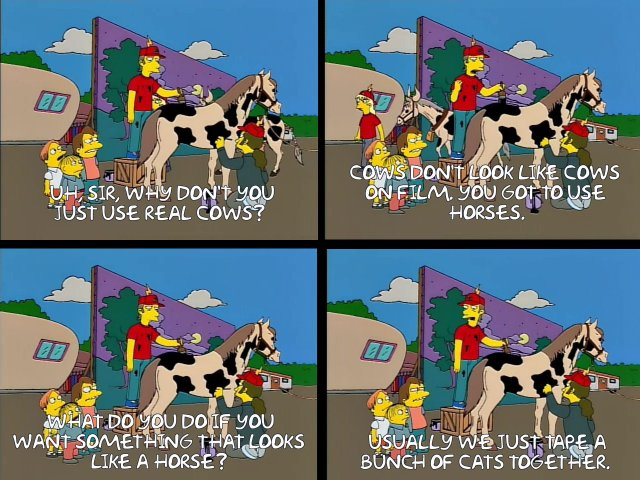

I’ve used this Simpsons example of “movie magic” before, but it’s perfect. Filmmaking is one unexpected surprise after another. Everything is riddled with issues, not just AI. The “magic” comes from finding inventive and effective ways around those issues.

There are a million tests that you could do to try and get more control over this week’s “dragon test”. What if you generated him on a green screen, so that you could control the background? Or filmed him over a projection of the background? What if you used a more realistic puppet base that would allow you to mix and match the “real puppet” with the AI-generated one? Or created the dragon using basic 3D, so that you could mix rendered 3D with the AI result? If you started the take with the mouth open, could you get AI to recognize the dragon’s jaw rather than chopping it off, and then just edit the mouth-open bit out of the end result? At the end his jaw looks like a weird mix of teeth and gills and stretched skin. But…isn’t that actually quite cool? Isn’t there some inspiration there for a dragon that’s different to what we’ve all seen a thousand times before?

If the best that we can ever get out of AI is limited results, that’s absolutely fine. What a great opportunity to come up with creative ways to use it, and ride the next wave of “movie magic”!

I’m willing to bet that the artists who get the best leverage out of it will be using it in unexpected ways, and not just hammering out more prompts in the hope of the magic coming to their door for free.

Well, this will be the last blog for this year. I wish you all a great break, and enough rest to start the next year with renewed vigour and creativity, and I’ll see you then!